On-premise syncing

Here’s all you need to know about on-premise syncing with Stock2Shop.

This documentation covers what on-premise data syncing is, how it works, and how long it takes to sync data.

What is on-premise data syncing?

If your source of data (usually an ERP / accounting system) is installed on a server at your office (or in the cloud), this constitutes an on-premise integration.

To sync from an on-premise source, we typically require our software (Apifact) to be installed on your server, from where it pushes updates to Stock2Shop. Apifact periodically scans your database for changes in product and customer data. These changes are flagged and sent as updates to the Stock2Shop console.

How does it work?

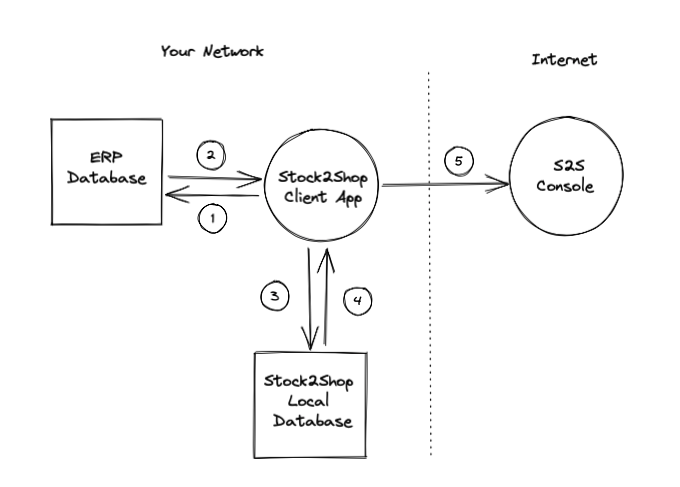

Every three minutes, the Stock2Shop Apifact software asks your source (ERP / accounting system) for data. This is typically done in chunks (e.g. 1,000 rows every three minutes). The data returned from your source is then inserted into a local database on your server, and all changes are flagged. Only the changes are then sent over the internet to the Stock2Shop console, where they are distributed to the relevant online sales channels.

The diagram above shows this process, with the steps labelled:

- (1) Stock2Shop’s application (Apifact) asks your ERP / accounting system for data.

- (2) Your ERP / accounting system returns this data to Stock2Shop’s application (Apifact) on your server.

- (3) This data is then inserted into a local database on your server.

- (4) Only products that have changed will be synced.

- (5) The products are pushed from Stock2Shop’s application on your server over to your Stock2Shop console on the internet.
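To make the five steps more concrete, here is a minimal Python sketch of one polling cycle. It is an illustration only and not Apifact's actual implementation: the function names (`row_hash`, `open_local_db`, `sync_chunk`), the `sku` key, the SQLite table layout and the hash-based change check are all assumptions chosen for the example.

```python
import hashlib
import json
import sqlite3

CHUNK_SIZE = 1000  # rows per polling cycle, matching the example figure in the text


def row_hash(row):
    """Return a stable fingerprint of a row's contents (hypothetical change check)."""
    payload = json.dumps(row, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def open_local_db():
    """Create the local database; an in-memory SQLite table stands in for it here."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE seen (sku TEXT PRIMARY KEY, hash TEXT NOT NULL)")
    return db


def sync_chunk(db, source_rows, offset, push):
    """Run one polling cycle over a single chunk and return the number of rows pushed."""
    # Steps 1-2: ask the source for the next chunk of rows.
    chunk = source_rows[offset:offset + CHUNK_SIZE]
    changed = []
    for row in chunk:
        digest = row_hash(row)
        stored = db.execute(
            "SELECT hash FROM seen WHERE sku = ?", (row["sku"],)
        ).fetchone()
        # Step 4: a row counts as changed if it is new or its fingerprint differs.
        if stored is None or stored[0] != digest:
            changed.append(row)
        # Step 3: record the latest state of the row in the local database.
        db.execute(
            "INSERT OR REPLACE INTO seen (sku, hash) VALUES (?, ?)",
            (row["sku"], digest),
        )
    db.commit()
    # Step 5: push only the changed rows (the callback stands in for the console).
    if changed:
        push(changed)
    return len(changed)


if __name__ == "__main__":
    db = open_local_db()
    pushed = []
    rows = [{"sku": "A1", "qty": 5}, {"sku": "B2", "qty": 0}]
    print(sync_chunk(db, rows, 0, pushed.extend))  # first cycle: every row is new
    rows[1]["qty"] = 7
    print(sync_chunk(db, rows, 0, pushed.extend))  # second cycle: only B2 differs
```

In this sketch, comparing fingerprints against the local database is what keeps unchanged products from being sent again on every cycle.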

How long does it take to sync data?

Stock2Shop interrogates a chunk of 1,000 rows (products) every 3 minutes, so a greater number of products will take longer to process.

Consider an example where you have 5,000 items to sync. First, we calculate how many chunks (batches) of products there are:

- 5,000 (total) / 1,000 = 5 chunks

Since we process 1,000 items every 3 minutes, it will take 15 minutes (5 chunks x 3 minutes) to go through all the products in your data source.
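The same calculation can be written as a small Python helper for estimating other catalogue sizes. This is a sketch: the function name `estimate_sync_minutes` is hypothetical, and it assumes that a final, partly filled chunk still takes a full 3-minute cycle, so the chunk count is rounded up.

```python
import math


def estimate_sync_minutes(total_rows, chunk_size=1000, interval_minutes=3):
    """Estimate the minutes needed to pass through every row once."""
    if total_rows < 0 or chunk_size <= 0 or interval_minutes <= 0:
        raise ValueError("total_rows must be >= 0; chunk_size and interval_minutes must be > 0")
    # Assumption: a partial final chunk still uses a whole polling cycle.
    chunks = math.ceil(total_rows / chunk_size)
    return chunks * interval_minutes


if __name__ == "__main__":
    print(estimate_sync_minutes(5000))  # 5 chunks x 3 minutes = 15
```

Under the rounding assumption, 5,500 items would count as 6 chunks and therefore take 18 minutes.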